I’ve been very slowly learning Mandarin. I use a mixture of Duolingo and Anki, but realistically, this is a pretty shit way to learn. Duolingo is good for gamifying the hell out of language learning and occasionally teaching you some new words, but it does not come close to being sufficient for even a beginner’s grasp of the language. Anki is awesome, but it is no substitute for working out the “conversation” muscles. What I really need is a corpus of all kinds of Chinese writing and Chinese content that I can consume. Unfortunately, accessing the Chinese side of the internet is annoyingly difficult without a Chinese phone number. There’s also not a lot of interesting content at my level (mostly my fault), so the end result is that I consume little native media.

The good news is there is an obvious fix: 🪄 AI 🪄. A typical model is trained on a few TB of (human-written) web data, which it then draws on to synthesize a response. Many models are free to download from Hugging Face, and surprisingly little compute power is necessary to run inference (that is, to run the model and ask it questions). Tools like llama.cpp have made GPUs less of a strict requirement; you can get OK performance on a reasonably spec’d gaming machine. If you have NVIDIA hardware with a ton of VRAM, you can get much, much better performance.

This is painfully obvious to anyone plugged into LLMs, but I am writing this for people who want to learn a language, not people who work with LLMs all day. I’m not a huge fan of the many startups providing a simple wrapper over someone else’s model and charging $50 a month for it, so I might as well show you how to do it for free.

Setup, prerequisites

I’m running this on Ubuntu 22.04. It should work on macOS (and on Windows via WSL), but YMMV. We need the latest release of ollama, and yi-34b-chat.

Ollama

Install ollama from here, or pipe straight to bash like a real cowboy/girl:

curl https://ollama.ai/install.sh | sh

Once installed, run with ollama serve. You won’t have any models installed, so nothing interesting should happen.
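If you want to confirm the server is actually up, you can ask it which models it has. This is a minimal sketch, assuming ollama is listening on its default address (localhost:11434) and that the /api/tags endpoint returns a JSON object with a "models" list; the helper names are mine, not part of ollama.

```python
import json
import urllib.request

# Assumption: `ollama serve` is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags_response: dict) -> list:
    """Pull the model names out of a parsed /api/tags response."""
    # A fresh install has no models; treat a missing or null list as empty.
    return [m["name"] for m in (tags_response.get("models") or [])]


def fetch_installed_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the running server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return installed_models(json.load(resp))
```

On a fresh install, calling fetch_installed_models() should give you an empty list, which matches the "nothing interesting" state described above.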

Yi-34b

Yi is an LLM trained by 01.ai on a corpus of English and Chinese language text. This makes it particularly well suited to our task: acting as a Chinese language tutor. There are other models, such as deeplearn, that could be a good fit, but my results with them have not been great.

It probably cost 01.ai several million USD to train this model (and they give it away for free), so thanks 01.ai.

To get the model:

ollama run yi:34b-chat

This model was packed up into a repo by Ollama’s maintainer. You can also import arbitrary GGUF models if needed; see below.
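Once the model is downloaded, you don’t have to stay in the interactive prompt. The ollama server also answers HTTP requests, so you can script it. Here is a rough sketch, assuming the default address and the /api/generate endpoint with streaming turned off; build_generate_request and generate are names I made up for illustration.

```python
import json
import urllib.request


def build_generate_request(prompt, model="yi:34b-chat",
                           base_url="http://localhost:11434"):
    """Build (but do not send) a one-shot generation request."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the prompt to a running ollama server and return the reply text."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return json.load(resp)["response"]
```

Something like print(generate("请用简单的中文介绍你自己。")) should then print the model’s answer, though on CPU you may be waiting a while.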

Prompts

Prompt engineering is a whole art, but here’s an example of what it can do. The more detail you can cram into the prompt, the better.

Example:

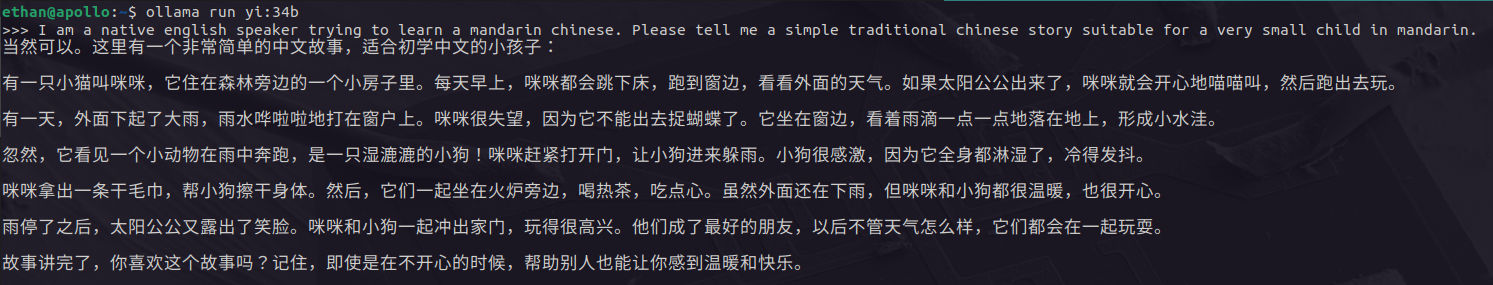

I am a native english speaker trying to learn a mandarin chinese. Please tell me a simple traditional chinese story suitable for a very small child in mandarin.

当然可以。这里有一个非常简单的中文故事,适合初学中文的小孩子: 有一只小猫叫咪咪,它住在森林旁边的一个小房子里。每天早上,咪咪都会跳下床,跑到窗边,看看外面的天气。如果太阳公公出来了,咪咪就会开心地喵喵叫,然后跑出去玩。 一天,外面下起了大雨,雨水哗啦啦地打在窗户上。咪咪很失望,因为它不能出去捉蝴蝶了。它坐在窗边,看着雨滴一点一点地落在地上,形成小水洼。 忽然,它看见一个小动物在雨中奔跑,是一只湿漉漉的小狗!咪咪赶紧打开门,让小狗进来躲雨。小狗很感激,因为它全身都淋湿了,冷得发抖。 咪咪拿出一条干毛巾,帮小狗擦干身体。然后,它们一起坐在火炉旁边,喝热茶,吃点心。虽然外面还在下雨,但咪咪和小狗都很温暖,也很开心。 雨停了之后,太阳公公又露出了笑脸。咪咪和小狗一起冲出家门,玩得很高兴。他们成了最好的朋友,以后不管天气怎么样,它们都会在一起玩耍。 故事讲完了,你喜欢这个故事吗?记住,即使是在不开心的时候,帮助别人也能让你感到温暖和快乐。

What a nice story. It’s not winning a Caldecott Medal anytime soon, but it’s serviceable. You can make the story more complicated, or even simpler, just by asking follow-up questions.
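Follow-up questions only work if the model can see the earlier turns. In the interactive prompt that happens for you; from a script you have to send the history yourself. A sketch of one way to do that, assuming a recent ollama release that exposes the /api/chat endpoint on the default address (the helper names are hypothetical):

```python
import json
import urllib.request


def add_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def build_chat_payload(history, model="yi:34b-chat"):
    """Wrap the whole conversation so far into a single chat request body."""
    return {"model": model, "messages": history, "stream": False}


def ask(history, question, base_url="http://localhost:11434"):
    """Send the conversation plus a new question; return (new_history, reply)."""
    history = add_turn(history, "user", question)
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(build_chat_payload(history)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)["message"]["content"]
    return add_turn(history, "assistant", reply), reply


# Example (needs a running server, so it is left commented out):
# history, story = ask([], "Please tell me a simple story in Mandarin.")
# history, simpler = ask(history, "请把这个故事讲得更简单一点。")
```

Because every request carries the full history, the second question can refer to "this story" and the model knows which one you mean.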

This took about a minute to generate on a Ryzen 5 5600X. It might take more or less time depending on your machine. If you have an NVIDIA GPU at your disposal, it will likely return much faster.

Troubleshooting

Too slow?

Buy a faster machine, or run a smaller model, or pay to run it on someone’s ludicrously fast machine.

Any of these things will improve generation speed. You can also wait – computing is only getting more efficient and mainstream devices are only getting faster. Running this model on bottom-of-the-barrel commodity hardware will be a piece of cake in a couple of years.

Bad model?

You could also look for a more sophisticated model, as there will almost certainly be a better one by the time anyone reads this post.

If you do find a better model, you can always box it up and send it to Ollama yourself. You can even take a generic, non-chat model and write a custom prompt for it to really tailor the experience and results. See the Ollama GGUF import guide here.
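To give a flavor of what "boxing up" a model looks like: ollama builds models from a small text file called a Modelfile. The sketch below just renders one as a string, using the FROM, PARAMETER and SYSTEM instructions. The GGUF filename, the tutor prompt and the temperature value are placeholders I picked, not recommendations.

```python
def render_modelfile(gguf_path, system_prompt, temperature=0.7):
    """Render a minimal Modelfile that wraps a local GGUF file."""
    return (
        f"FROM {gguf_path}\n"                      # which weights to load
        f"PARAMETER temperature {temperature}\n"   # sampling randomness
        f'SYSTEM """{system_prompt}"""\n'          # standing instructions
    )


# Hypothetical example values; swap in your own file and prompt.
modelfile_text = render_modelfile(
    "./my-model.gguf",
    "You are a patient Mandarin tutor. Reply in simple Mandarin.",
)
```

Save the text as a file named Modelfile, then run ollama create mandarin-tutor -f Modelfile followed by ollama run mandarin-tutor (the name mandarin-tutor is arbitrary). A base, non-chat model will probably also need a TEMPLATE instruction matching its prompt format, which depends on the model, so check the import guide for that part.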